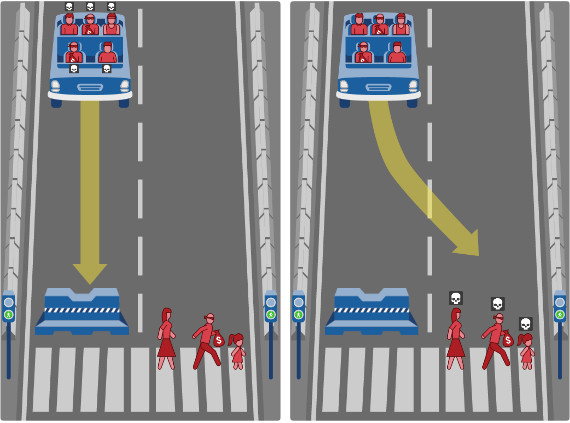

A trolley (or train) is about to run over and kill five people. You can pull a lever to switch tracks, which would cause it to kill one person instead.

What do you do?

MIT based its Moral Machine on this problem. It collects responses from large numbers of people to a range of scenarios, with the aim of informing what moral decisions a driverless car should make.

You can even create your own scenarios.